Theorymaker - a language for Theories of change.

Steve Powell

Press your space bar or arrow keys to begin

Overview of sections

SECTION 1: Theorymaker is …

… a visual language for project Theories and “Theories of Change” providing three things:

a grab-bag of optional graphical elements to express many ideas (like the “strength” of a causal connection), which can be used with PowerPoint, pencil-and-paper, etc, as well as in a text-based web app.

a way to display, talk about and even do “soft calculations” with things, including effects and impacts, which we only know about vaguely or incompletely.

a unified way to talk about Theories and Theories of Change, providing:

- definitions of ideas like “Variable”, “Influence” etc

- optionally, elements to help Theories embrace complexity

- theory-based suggestions for how to use Theories e.g. in evaluations

- a common tool-kit to express and compare specific models of, and templates for, Theories of Change (from Logical Frameworks to Fuzzy Cognitive Maps) as special cases of a more general framework ℹ If this rather ambitious aim succeeds, it means that Theorymaker can be a kind of universal framework for Theories of Change. So if we can establish, for example, a convincing definition of a concept like “effect” within Theorymaker, we can apply it to other models (e.g. Logical Frameworks) too. This claim will be explored in the “Fundamentals” sections of each slide - try clicking now on the button at the top of this slide .

| Contents of this section | |

| These slides … | |

| Who’s suspicious of Theories of Change? | |

| Keep it simple | |

| → Next section |

Some slides, like this one, have a section labelled “Fundamentals”. They are meant for the serious reader. When complete, they will provide formal framework for understanding Theories of Change. The casual reader will probably want to skip them!

If you want to read all the slides together in one PDF, click the print icon above.

These slides …

- include a small “Clone” link above each diagram: click to edit a version of the same diagram at theorymaker.info.

- include symbols like this: ℹ Here is some extra information move your mouse over them to see more.

- sometimes have links in the top-right with names like “Notes”; move your mouse over them to see more.

- are available at http://www.pogol.net/public/slides.html.

- are available in a printable PDF version - or click the “print” icon.

If you’re looking for a simple introduction to Theories of Change, try this first.

Sign up for occasional email updates on Theorymaker.

Any questions about Theorymaker or the apps, contact me: steve@pogol.net

Here are some notes for this slide.

Who’s suspicious of Theories of Change?

There are plenty of reasons to be suspicious of Theories of Change. Perhaps they:

- are unrealistic, too optimistic?

- are not flexible enough, don’t reflect how even the big features of plans change?

- don’t reflect the details of hands-on management and how the little features change?

- don’t reflect how new problems (and responses) can emerge?

- are too worried about measuring things in numbers?

- don’t account for complexity of different stakeholders with different visions?

- assume “everything is linear”?

Theorymaker is intended to provide a framework for Theories of Change which is broad enough to accommodate all these criticisms.

But these slides do not deal with the practical or political aspects (who creates Theories of Change, how and why) - even though these aspects are very important.

Keep it simple

Theorymaker suggests a variety of symbols to make a variety of distinctions, for instance between different kinds of Variables. But it is not compulsory, for example, to display the symbol for binary Variables on all binary Variables. Only use them if you need to highlight that particular distinction. The symbols which Theorymaker provides fit together into a complete “language”, but you can just use the bits you need, like a grab-bag of ideas.

Only use the symbols you need, just enough to avoid ambiguity.

Feel free to use just one or two of these elements or ideas in your Theory of Change. There is no club to join or product to buy!

SECTION 2: Get started with some examples

This section gives a few examples of Theories of Change drawn with Theorymaker symbols and ideas. They are all based around the same very simple project.

| Contents of this section | |

| Village resilience: an example | |

| Village resilience as a defined Variable | |

| Village resilience: all or nothing | |

| Village resilience: showing the Difference made by a project |

Village resilience: an example

This is a simple diagram to show the outlines of an intervention to increase the resilience of a village.

There are two interventions shown by “▶ ” symbols, each representing some project activities. The influence of the livelihoods intervention on family income is (hopefully) “plus” (⊕), meaning that any increase results in an increase in income. The influence of the preparedness intervention on flood risk is (hopefully) “minus” (⊖).

Resilience is not shown as a separate Variable, but as a box which includes family income and (low) flood risk.

The two interventions are simplified here as all-or-nothing (“◨”) Variables, the “switching on” of some activities which either do happen or don’t. The other two are shown as continuous “lo-hi” (“◪”) Variables - they can have any value from low through to high.

Family income and reducing flood risk are shown as things to be desired (or avoided), shown by the ♥ and 🙁symbols.

Village resilience as a defined Variable

This is similar to the previous diagram. Now two orange boxes are used just help to organise the diagram. They are not part of the causal story.

Resilience is now shown as a Variable in its own right, but as something defined in terms of income and flood risk rather than caused by them. Defined Variables are shown with dashed lines.

To assess resilience (in this model) we would just assess flood risk and income and combine the information in some way: it wouldn’t make sense to measure resilience separately, so it is given a dashed border. (For example, we might conceive of resilience as being somehow the “sum” or “average” of the two contributing Variables. However, this diagram is about the underlying ideas, not on the details of measurement or “indicators”.)

Resilience is not shown as being valued in its own right in this case - it has no ♥ or 🙁 symbol - it is merely valuable because the other two Variables are.

Village resilience: all or nothing

This diagram is similar to the previous one. But this time, flood risk and family income are not valued on their own (they have no “♥” or or “🙁” symbol) and they are conceived of as all-or-nothing. If both these things happen (their arrows are joined and have an “AND” symbol) we declare that the village is now “resilient”, again presented as a simple case of yes or no (“◨”). As in the previous example, resilience is not conceived of as a further Variable downstream which is causally influenced by income and flood risk. Instead, it is defined in terms of them. And this time, we do not care about the flood risk or the family income on their own: we only care about this critical level of resilience, perhaps because our funding depends on it.

The management of a project with this Theory of Change might behave quite differently from the previous example:

- Once a village achieved the critical income target, work on that could stop in favour of work on the flood preparation target, and vice versa

- Once one village achieved this critical resilience target, it would no longer be interesting for project management, who might focus on other villages

Village resilience: showing the Difference made by a project

This diagram is similar to the first one, but now each Variable also presents information about projected performance with and without the project. We might see this kind of diagram after the project is over as the summary of an evaluation. Or we might see it before the project has even begun, as a way of expressing targets - what we hope to be able to achieve: the Difference we will make.

The ✔ symbol shows that the intervention happens rather than not happening. The Difference made to family income has even been quantified: $5 rather than 4. This “$4” is what would have happened without the project, which is not necessarily the baseline score, as income might be changing anyway.

In Theorymaker, we usually express Differences within double brackets, either as a summary such as, say, “improved” or as a formulation with “rather_than” (like we did here for flood risk). The ✔ also shows a Difference - implemented rather than not implemented.

SECTION 3: Main symbols and elements

How to get these symbols

Using pencil & paper? Just draw them!

Using PowerPoint etc? Just copy and paste them from this page.

In the Theorymaker text app, to add these symbols to a Variable, just add the code in the right-hand column, e.g. !up, to the name of the Variable. But how to add them to an arrow? You create an arrow to How warm you feel from Strength of wind by indenting the latter by one space, like this:

How warm you feel

Strength of windAnd you can add a label, e.g. ⊖ to an arrow by typing the code for it (!minus) in brackets just after the space, like this:

How warm you feel

(!minusarrow)Strength of windVariables: things that could be different

Variables: things that could be different. We represent them with words written inside simple shapes, usually rectangles. They are the basic building blocks of Theories. Ideally we also indicate the range of possible Levels the Variables can take (e.g. yes and no, low through to high, etc). We call them Variables - with a capital V.

This kind of network diagram can be very useful but these rectangles are not Variables but things.

Now, these are Variables. They can take different levels or values:

- here, one Variable can be zero or very high, or anywhere in between.

- the other can be only “for” or “against”

In these slides we will not distinguish much between the Variables and Theories as communicative acts, i.e. things we write down and share, and what they represent.

Conventional descriptions of Theories of Change rarely discuss what their basic elements actually consist of.

Simple Theories: showing the causal influences of one or more influence Variables on a consequence Variable

We use arrows to link one or more Variables (the “influence Variables”) to another (the consequence Variable"). Meaning: the influence Variables causally influence the consequence Variable: if you manipulate the influence Variables, this will make a difference to the consequence Variable. The combination of influence and consequence Variables (and the arrows joining them) is called a simple Theory (with a capital T).

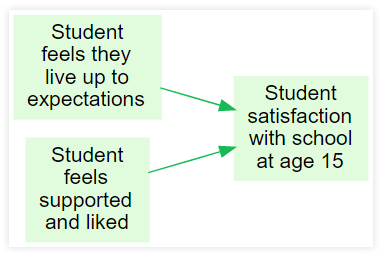

This diagram shows how two Variables influence another Variable …"

“How satisfied is the student? It depends on their feeling of support, of living up to expectations , …”

Simple Theories are nuggets of practical information about the influences on a Variable:

- more or less accurate rules of thumb, help us to understand how the world works

- more or less general or specific

It’s our job to provide the best Theories with the best evidence! In any give situation, many different, overlapping Theories can usually be applied.

Essentially, a simple Theory is nothing more than an equation of the form

V=f(U1,U2...) | C

which expresses some variable V in terms of some other variables U1, U2 … via a function f (given a particular context C). For example,

Student satisfaction with school =

f(How much student feels that they live up to expectations,

How much student feels supported and liked)The fun starts when we ask about the nature of these variables, what kinds of functions are allowed, etc. Theorymaker points out that even when many of these things are not completely defined, still we can do some reasoning with sets of such equations - see Soft Arithmetic.

How do we express the causal relationship? It isn’t equality

\[V=f(U1,U2...) |C\]

This “=” is neither equality nor logical implication.

Our causal knowledge is captured by:

\[acceleration=f(force) |mass=m\]

… but, surprisingly, not (at least, not in the same way) by:

\[mass=f(force) |acceleration=a\]

… because if we intervene to change the acceleration, we don’t change the mass.

How do we express the causal relationship? It isn’t logical implication.

This logical implication is true (because if the left-hand-side of an implication is true, the whole expression is true.)

\[We\,are\,on\,planet\,Earth\, \Rightarrow \, The\,sky\,is\,green\]

… but it doesn’t capture any causal knowledge:

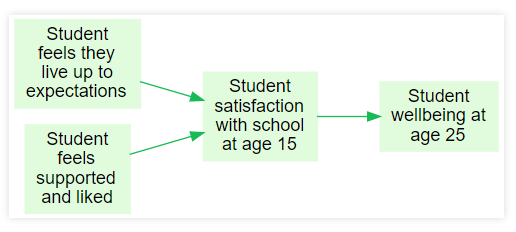

Joining together simple Theories into composite Theories

… we can join them together into a “composite Theory”.

A composite Theory is two or more simple Theories “snapped together” (providing there are no loops): ℹ This does not mean we can’t introduce “feedback loops”. See later. The resulting network is a DAG - a Directed Acyclic Graph, see (Pearl 2000)

A Theory is either a simple Theory or a composite Theory.

So as a composite Theory is just a set of simple Theories, from a formal point of view, a composite Theory is just a set of equations (see the “Fundamentals” tab in the previous slide)

So, given a set of separate simple Theories,

\[V=f(U1,U2...) |C\] \[W=f(X1,X2...) |D\] …

… we get a single, composite Theory, which is true in the intersection of the contexts.

\[V=f(U1,U2...) |C \cap D\] \[W=f(X1,X2...) |C \cap D\]

“…|C” means “given a particular context C”.

(“Ministeps”: (Birckmayer and Weiss 2000))

Talking about Theories using Words in Capitals: Variable, Theory …

In the previous slides, we saw these words …

- Variable

- Simple Theory

- Composite Theory

- Theory

… introduced for the first time in bold, together with their meanings.

We will use these words (in capitals)ℹ More technically, these Words in Capitals are part of the Theorymaker meta-language to speak about the diagrams we will see in these Theorymaker slides.

We also saw how the Variables at the beginning of a simple Theory are called the “influence Variables” and the other Variable is called the “consequence Variable”. These are useful extra words for talking about the parts of a simple Theory.

Also, we call Variables “at the end” of a Theory, which have no consequence Variables “no-child” Variables, and we call Variables “at the start” which have no influence Variables “no-parent” Variables.

Indicating the possible Levels a Variable can take, by listing or describing Levels: … or using symbols

A Variable label can contain information about the different Levels the Variable can take, by actually listing them like this: “Levels: red, orange, green” or in some other way, e.g. “Levels: counting numbers” or by using symbols – see next slides. This isn’t compulsory, but can help clarify what kind the Variables are.

Some kinds of Variable have special names in the Theorymaker language; two of the most common kinds are “lo-hi” (symbol: ◪) and “false/true” (symbol: ◨).

Different types of Variable

It is very useful to understand different types of Variable we see in Theories of Change, and what they have in common. Here are some broad types, based on the kinds of levels they can have.

An ordered Variable is one whose levels can be put into some order. ℹOrdered Variables are not to be confused with “ordinal Variables” which are a special kind of ordered Variables with a discrete number of non-numerical values, like the results of a Likert scale

A continuous Variable ℹIncludes both interval and ratio Variables (Stevens 1946) is an ordered Variable where, given any pair of levels, you can always find another level between them. A Variable which is not continuous is called discrete.

A directed Variable is ordered (continuous or discrete) but also, the order is not symmetrical: one direction is preferred or more positive (higher, better, bigger, truer …) than the other.ℹ“Directed Variables” are not part of standard social science or evaluation terminology. But they are really useful to help us with our aim of making it easier to talk about Variables and influences for which we have only vague or incomplete information. Ordered Variables which don’t have a specific, asymmetric direction are not so frequent. Usually one direction (bigger, hotter, further, more approving…) is understood as primary, and the opposite direction is, well, just the opposite.

Almost all Variables ℹAt least, almost all Variables which can be measured, in contrast with, say, narrative Variables. in Theories of Change are directed:

Continuous numbers, e.g. Weight of supplies delivered

Counting numbers, e.g. Number of visitors to a website

“Ordinal” Variables, e.g. alert level in a national warning system (levels = all clear, mild threat, severe threat)ℹThere aren’t so many naturally occurring examples, but plenty of constructed ones like scores on a Likert scale

Lo-hi Variables ◪ e.g. level of approval for a President

False/true Variables - Binary Variables in which one level is in some way the correct or preferred one. Basically, these are most affirmative sentences e.g. the law is passed. ℹIn most languages, like English, we don’t usually say that if a false/true Variable switches from no to yes, that this is a positive change or an increase. We dont always see a that this is a bit like a change from small to large or from bad to good. But in Theorymaker, we do. It might seems strange, but it is really useful, because it simplifies our key task of providing ways to talk about influences which we only have imperfect or inaccurate information about.

Some Variables which are not directed:

- Any “nominal Variable” like country of birth, colour of traffic light, including binary Variables (like “heads/tails”) which have no preferred level.

- Circular / cyclic Variables e.g. day of the week, season. ℹThese are similar to ordered Variables because late spring is a bit later than early spring in the same way that early summer is a bit later than late spring; but is winter earlier or later than summer? a Variable which is not directed is longitude on the Earth’s surface - “westwards” is merely the opposite of “eastwards” - neither direction is primary. Although this example doesn’t really work either, because longitude is not fully ordered in the usual sense: is 0 degrees greater or smaller than 180 degrees? Cyclic Variables seem to be mostly of this type, e.g. also hue on a colour wheel.

- Hierarchical Variables: e.g. taxonomy (species classification)

- “Narrative”, “emergent” and “rich” Variables.

◨: False/true Variables

This symbol: ◨ means that this Variable can take only two Levels - “false/true”, “no/yes” etc.; and that the Variable has a direction: one level (e.g.

true) is somehow important, preferred or correct, in contrast to the other. We call these false/true Variables - a kind of directed Variable. ℹ false/true Variables are directed binary Variables. In logic, they could be called “propositions”. There are other binary Variables, i.e. Variables with only two levels, such as “does the coin land as heads or tails” which we don’t classify as false/true Variables because the levels are symmetrical, interchangeable, they don’t have one preferred level. .

Variables with only two levels are Variables too.

When a Variable is numeric, say, “number of children attending a session”, it is quite easy to recognise it as a Variable. But when Variables are expressed as false/true propositions or statements, like “the law is passed”, it can be harder to grasp that they too are Variables, with just two Levels. Theorymaker suggests using the “◨” symbol for them. Usually we don’t need to specify exactly what the Levels are (false/true, no/yes etc) because it is obvious from the context.

Sometimes logframes and Theories of Change are expressed only using binary, false/true Statements. But they don’t have to be.

Why do we say “false/true” rather than the more usual “yes/no”? Because mostly when talking about directed Variables we mention the lower end of the scale first, e.g. when we say “1 to 100”.

false/true Variables and lo-hi Variables are the most obvious examples of directed Variables which are by far the most frequent kinds of Variable we see in real-life Theories of Change. If we an understand these two kinds, and the fundamental similarities between them, we have gone a long way to understanding most of the Variables we will meet in real-life Theories of Change.

◪: “lo-hi” Variables: e.g. “amount, of agreement”. Range from “none” or “low” to “as high as possible”.

This symbol: ◪ means that this Variable can be low, or high, or any Level in between, e.g. percentage, amount of agreement. We call these “lo-hi” Variables – a kind of directed Variable. Five characteristics, see below.

Key characteristics of lo-hi Variables:

- directed ℹAny possible level can be compared with any other possible level: stronger or weaker, higher or lower; and this direction is not symmetrical: the shifts from poor towards rich, lower towards higher, empty towards full, bad towards good, are all in the same, positive direction, unlike e.g. the shift from red towards yellow. We humans have really simplified our worlds in constructing these kinds of Variable.

- continuous, not discrete

- have a minimum and a vague maximum. ℹFor example, the relevance of a project activity or the fit of a species into an ecological niche or the quality of a particular goal in soccer or of a novel - there is no clear maximum, and for any one particularly high-level example it is always just about possible to imagine a higher one. But in practice, trying to imagine an “even better goal” than a particular extraordinarily good goal becomes a hopeless exercise.

- roughly scaled but not numerical ℹ That means in particular that although the “distance” from, say, a poor goal to a good goal is roughly comparable to the “distance” from a good goal to a very good goal, there is no readily available way to be sure and therefore to construct an actual numerical scale. It might be possible to construct one but we don’t have one yet and we understand one another anyway. Tip: When sketching your ToC, don’t worry about putting your Variables into numerical form! Numbers are “too accurate” for most

lo-hiVariables. We can still do serious reasoning withlo-hiVariables using Soft Arithmetic. - as a consequence, levels can be compared between even quite different Variables.

We have always been told that, in serious science, Variables are best modelled with actual numbers. ℹ“Lo-hi” Variables have some strong similarities to those in Fuzzy Set Theory (Ragin and Pennings 2005): there is a clear direction, and a maximum and minimum, but the underlying ideas are quite different. But how are numbers a good fit for, say, extent of approval? Numbers go on forever, and approval doesn’t: it has some kind of vague, natural limit; it’s a lo-hi Variable.

Examples:

- “the President’s support for the new law”

- “quality of implementation”

- “intelligence of the child” ℹThis one is arguable; some might claim that there could be a person of arbitrarily high intelligence. But it really isn’t clear what an IQ of, say, 2000 means, or who would design a test for it.

- “level of tolerance”

- “an actor’s level of fame”

- “literary quality of the writing”

- “innovativeness of the new approach”

- “level of satisfaction”

We use “lo-hi” Variables inside simple Theories any time we say anything like “the more, the merrier” or “the more lobbying you do, the more support you will get:”

Comparability of levels between different lo-hi Variables

Whenever we want to use a Likert-style question as an “indicator” for an underlying Variable, this Variable is probably lo-hi. Quantitative scientists usually presume that what is “driving” a Likert-type indicator has to be an underlying numerical Variable. Theorymaker encourages us to ask: why does it have to be “really” numerical, why can’t it be really lo-hi?. ℹ Scores on Likert scales, like “How much do you approve of the President (Levels: not at all, somewhat, completely)” are similar to lo-hi Variables but they have a discrete number of levels. If you ask someone to make a mark on a line to correspond to their approval, the resulting Variable is a perhaps a lo-hi Variable, though the maximum is clearly defined.

But how do we actually record, give names to, scores on lo-hi Variables? One way is to say e.g. “in the top third” or “in the bottom half” or “in the top ten percent” (or: “in the top decile”). We can select, say, deciles or even percentiles rather than halves or quarters when we are expressing greater confidence in this (essentially rough) assessment. It seems we mostly do this by imagining ordering different cases in our minds and then, say, noting that a child is amongst the tallest quarter of the children. We seem to be able to do this even when the reference group of cases we are to put into order are not specified and don’t have a clear maximum or minimum. See also this.

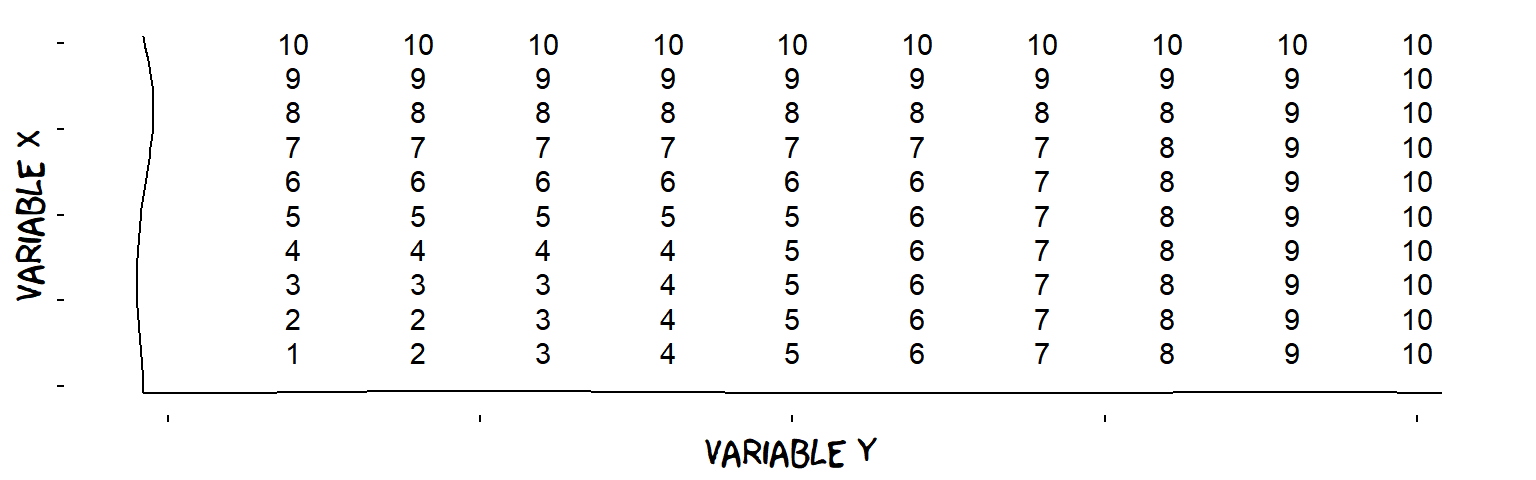

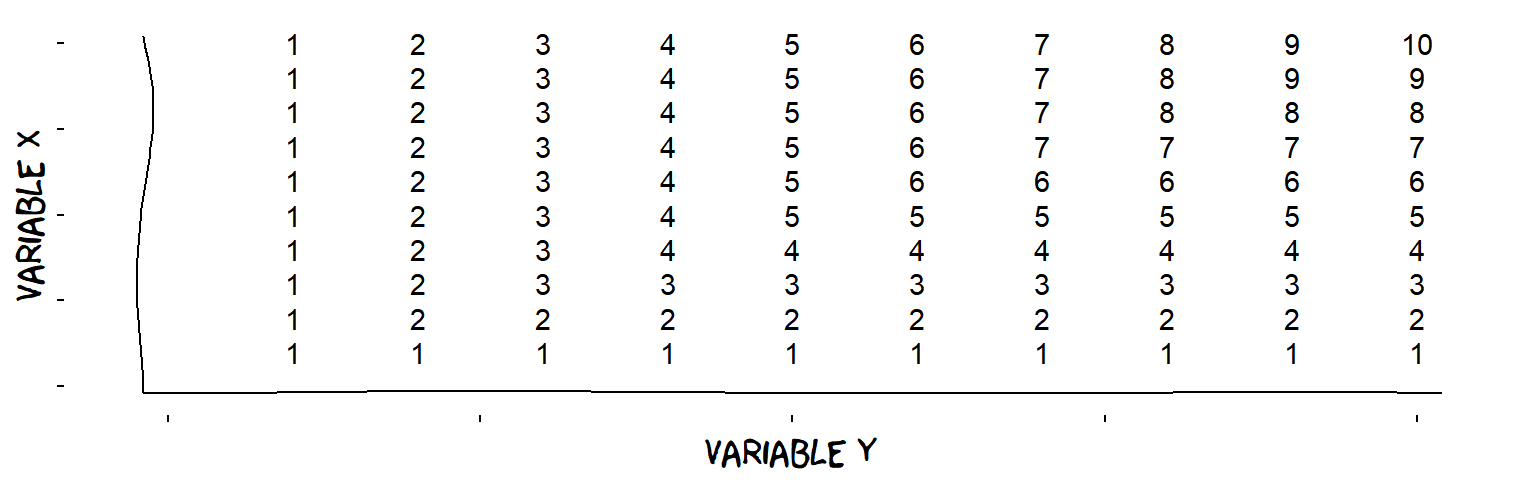

Perhaps surprisingly, it is possible roughly to compare levels on one directed Variable with another level on a completely different Variable. So for example, most people don’t have much difficulty in naming an actor as famous as the “Sex Pistols” were revolutionary (Kahneman 2011). We seem to be able to do it directly, not only via the intermediate step of saying “the Sex Pistols were in the top one percent of revolutionary bands, so I need to think of an actor who is in the top one percent of fame”.

Evaluation is bursting with lo-hi Variables. How relevant was the project, how satisfied were the stakeholders …

►: “Intervention symbol” for Variables we intervene on

In many Theories, the “no-parent” Variables (the ones with no influence Variables contributing to them) are assumed to all be under the control of our project.

But of course the world isn’t like that.

Sometimes it can be really useful to show where we intervene and where we don’t.

Make it clear which Variables you are intervening on, by marking them with an intervention symbol: ► and also making sure it is clear what the intervention does – for example, launching our campaign (rather than not launching it).

Usually, when we include intervention variables, the downstream variables are also expressed in such a way that the consequences of the intervention can also be read off them, e.g. “Law on same-sex marriage is passed (rather than not passed)” – as opposed to, say, “Whether the law on same-sex marriages is passed”.

The other no-parent Variables in the diagram are not under our control. In this example, they have also been expressed as Statements (“Celebrities speak out” – rather than not speaking out.) The difference made to the downstream variables such as “Legislators have more favourable views” take these Statements or assumptions into account.

Use “♥” for Variables we value, or “☹” for Variables we don’t

In many Theories, the “no-child” Variables (the ones with no consequence Variables) are all assumed to be ones we care about (and the only ones we care about).

But of course the world isn’t like that.

Sometimes it can be really useful to show which Variables we value, e.g. by marking them with a heart ♥. (We can also use a “frowny” symbol: 🙁 for things we don’t want.)

In this case, we value the legislators and the public having a more positive attitude to same-sex marriage not only because they are more likely to pass the law, but for other reasons we aren’t mentioning here.

It’s also possible to write more than one symbol, for example: ♥ ♥ ♥, to distinguish, say, between things we value a little and which we value a lot. Or just write e.g. “Value=high” or “Value=low”.

What makes a Theory into a Theory of Change?

A Theory of Change is someone’s Theory about how to get something they want by intervening on something they can control.

A school Principal has this Theory …

So this is a Theory of Change (for the Principal):

- she believes the Theory

- she values at least one Variable

- she controls at least one Variable

Theorymaker symbols:

► = we can control this

♥ = we value this

This example happens to include just three Variables in a chain-like structure. Countless other structures are possible.

Listing assumptions on the arrows

Text added to an arrow, preceded by the word “Assumption:” or “Assume:”, shows that there is actually an additional influencing Variable. This is just an abbreviation: it saves space rather than having to actually add the additional Variable to the diagram.

This Theory is fine, but we can also simplify it …

… like this:

An “assumption”, expressed like this, is nearly always a “false, true” Variable like “Celebrities speak out” rather than, say, a continuous Variable (like “How much celebrities speak out”). ℹAlso, we probably imply something about the factual level of these assumption Variables - see later

Bare arrowheads for unnamed additional influences

Bare arrowheads (without any lines) are used to show that there are some other influences in our Theory of Change; we can’t, or don’t want to, specify what they are but we don’t want to forget them.

The additional green arrowheads, like here on the Variables “Law is passed” and “Public opinion improves”, remind us of the presence of other, unnamed, influences.

Showing specific features of Variables, like who (or what) they belong to, like this: “Child: …”

We can specify who or what a Variable belongs to, by mentioning the ‘owner: …’ at the start of the Variable name. We can do the same for any other feature which a Variable might have, e.g. time-point, country, county … and which might distinguish it from other Variables.)

This suggests that somehow, trust in strange dogs depends on opportunities for interaction.

Grouping boxes for Variables with similar features

When the labels of several Variables begin with the same feature or owner e.g. “Child:”, they can be surrounded by a grouping box; that text is then deleted from those Variables and added to the box. It’s the same thing, it just saves a few words and helps structure the diagram.

This Theory says that trust in strange dogs depends on opportunities for safe interaction.

Both Variables have a special feature (Child:...) which tells you who or what the Variable belongs to.

Other kinds of box are summarised later.

Deleting identical information from both Variables (the fact that they belong to the child) and transferring it to a box which groups them:

The grouping box also reminds us that these two Variables belong to the same child. That has very important practical consequences.

One Variable for each person in a group

The words “For each” at the start of a Variable label, followed by a (explicit or implicit) list of people, time-points etc., says that this is actually a set of Variables, one for each person or thing in the list.

The same teacher teaches three different students - his ability contributes to their achievement, and their achievement in turn influences his pride.

Sets of Variables like this (similar to one another but belonging to different people or time points, or countries, or firms …) are very common.

Some people, especially statisticians, use the word “variable” only for sets of Variables like these. That can make things simpler in statistics, but in Theories of Change we often have individual Variables as well as sets of them. Theorymaker suggests calling these sets “for-each Variables”: treat them like one Variable but remember, really it is a set.

“^^”: “for-each-time” Variables which repeat across time

A “^^” symbol shows that a Variable is actually a set of discrete Variables, stretching across time, e.g. across the life of a project. This kind of set is also called a “for-each-time” Variable; we draw just one though we know it actually represents several ℹThis could be a discrete set of Variables, e.g. one for each day, or, in principle at least, the “for-each-time” Variable could mark off a continuous stretch of time which we could measure at any given second or micro-second, like a patient’s temperature.

In Theories of Change, unlike in statistics, we assume (unless told otherwise) that Variables exist just once in time, e.g. a voter’s decision to vote a certain way on a particular election day with a specific date,, like this.,

Variable sets which stretch or repeat across time can be replaced with “for-each-time” Variables.

These two diagrams are equivalent. Here, the set of three Variables, one for each day, has been replaced by a single “for-each-time” Variable.

“^”, and related symbols, for individual events

A circumflex symbol (“^”) says that a Variable is defined just once, at a single point in time.

What if we need to make it clear that a particular Variable represents an individual event? These symbols can be useful:

^ = a discrete Variable which happens just once, at some time during the time period in question

^_ = a discrete Variable which happens just once, at the beginning

_^ = a discrete Variable which happens just once, at the end

We already saw ^^ for a discrete Variable which repeats many times, e.g. daily.

In this Theory, the first and third Variables take place at single time-points.ℹThe Variable in the middle is probably a “memory” Variable.

Many traditional Theories of Change are sliced into “phases” which define when the Variables within it start and end. With more flexible Theories, such as those you can make with Theorymaker, we can’t assume that a project has strict phases.ℹor that there is a “time axis” within the Theory of Change We need other ways, like these symbols, to show a Variable’s timing.

“~”: “for-each-time” Variables which stretch continuously across time

A “~” (“tilde”) symbol shows that a Variable is actually a continuous set of Variables, stretching across time, e.g. across the life of a project. This is called a “for-each-time” Variable; we draw just one though we know it actually represents very many ℹ (actually, an “infinite number”) . Continuous “for-each-time” Variables mark off a continuous stretch of time which we could measure at any given second or micro-second, like a patient’s temperature.

Continuous for-each-time Variables are distinguished from discrete ones which are marked with “^” and related symbols.

The “~” symbol in the intermediate Variable below shows that trainee skills are conceived of as stretching right from the beginning to the end of the project. You could test a trainee any time you choose. (The first Variable only makes sense at the beginning of the project and the last one only makes sense at the end).

Dashed arrows: for defining Variables in terms of others

Dashed arrows pointing to a Variable show that it is defined in terms of the Variables at the beginning of the arrows, not caused by them.

The arrows in a Theory of Change should show causal influence. But the last Variable above is just the sum of the other two, ℹAssuming there are just two Regions in the country by definition - so we should use dashed arrows:

Where necessary, you should specify the function involved – in this case, logical AND.

Or omit the defined Variable completely and group your Variables visually using a box:

Note that where we had a “mathematical” function underlying the causal influence, we have “mathematical” function underlying definitions. The two cases are exactly parallel.

Dashed borders: for Variables which are defined in terms of others

A dashed border around a Variable shows that the Variable cannot be independently measured because it is defined in terms of others. So if you’re planning to count the number of girls and the number of boys, it doesn’t make sense to also ask for the number of children as if it was additional information.ℹDashed lines can be used too. .

We already saw that to include a defined Variable in your Theory, you can use dashed arrows. But here, we have also added a dashed border around the last Variable.

Again, where necessary, you should specify the function involved – in this case, logical AND.

If you already have “indicators” for the defining Variables, there is no need to seek additional “indicators” for the defined Variable as well. The evidence is shared.ℹBut this Variable is just as real as the others. Definition and causation are about relationships, not Variables.

General and specific contexts

Theories can be quite general - e.g. they can make claims about how some generic child will behave. We can also apply them in more specific contexts too, for example to derive a Theory just about boys in Hungary in 2018, or even just about a specific boy, or about a specific boy on a specific day. ℹIn Theorymaker, we don’t make a hard-and-fast distinction between general and specific Theories: it’s a matter of degree.

A more generic or general Theory is useful in a wide range of contexts. But we can’t be too sure it will apply 100% in any specific context; obviously there will be exceptions (for example, a Theory about child behaviour might not work for some specific children). Sometimes the Theory will specifically include additional Variables (e.g. child’s anxiety level) which help to catch some of these exceptions and make it more useful in a particular context. But sometimes it won’t.

The whole point of simple Theories is to give relatively simple, rule-of-thumb causal information which might need to be refined with additional, perhaps more specific, Theories.

So we can define a Context as, in general, a set of Variables; and, in particular, as a set of Variables with additional restrictions, e.g. some or all of them are forced or restricted to specific values or levels.

Later, we will see how Variables can appear from nowhere, or be created and destroyed, and how to gather information on Variables we never expected. For example, the Variable “attitudes to Presidents using Twitter” did not really exist in 2015 and certainly did not exist before Twitter.

Combining contexts

The context in which a particular Theory applies is the intersection of the contexts of all its constituent bits.

… and this theory is valid only for children …

… giving a new Theory which is valid only in the context which is the intersection of the two contributing contexts: male children.

ℹ If even one Variable in your Theory has a fixed time, they all do. foot-

SECTION 4: Different kinds of influence

This section (work in progress) is about the kinds of influence which Variables can have on one another: how and how much. We encounter words like “linear”, “necessary” and “interaction”.

Problem: Specifying the kinds of influence between Variables

This simple Theory tells us that some Variable(s) influence another, but we want to know how: what level of the consequence Variable to expect for every combination of the levels of the Variable(s) which influence it? However, this seems like a hopeless task. For one thing, most social scientists assume that the causal connections must have the form of precise numerical functions - even when we only have imperfect knowledge of them.

Maybe student satisfaction = student's feeling of living up to expectations. This describes a linear relationship. But why should the relationship be linear? There are uncountably many possible functions from a numerical Variable to another. Will we ever have enough information (or time) to establish a really robust theory about the exact shape of this function? And what happens when we have more than one influence Variable? The (arbitrarily complicated) function from living up to expectations to student satisfaction might be different for every different level of some additional influence Variable. A real Theory of Change is composed of many such simple Theories; are we really supposed to identify (or carry out ourselves) serious research which gives us precise formulations of each of these functions? No, we don’t have the time or money.

As evaluators, we need:

- serious, clearly-defined ways to roughly specify the influences which Variables have on one another, (like “frustration increases as stress increases”)

- rules for doing “soft calculations” with such statements,

- rules for roughly working out “effect”, “contribution” and “impact” based on a Theory and some data

- and we need all this to work for all the different kinds of Variable (and influences between them) which we are likely to encounter in Theories of Change.

Solution: Different ways, especially rough ways, to specify the kinds of influence between Variables

Details added after the word “Influence: ” or appropriate symbols, on a Variable label, or on incoming arrows, specify what level of the consequence Variable to expect for every combination of the levels of the influence Variable(s).

Specifying influences tell us not just that some Variable(s) influence one another, but how.

Trying to do this with the precise, numerical approach typical for quantitative sciences is very problematic and sets the bar much too high. This has bad consequences.

- First, as evaluators and project staff we mostly just give up and don’t even try to specify even roughly the kinds of influence which are involved in our Theories of Change. We just draw boxes and arrows.

- Second, the Theories we draw have a kind of limbo status - although they are central to thousands of multi-million-dollar projects we can’t even say what they are; they are not meant to be taken seriously as theories by experts in the domain – so what are they, impressionistic sketches?

We will look at rough ways to specify influences. First, we will look at cases where just one Variable influences another. We can start by trying to formalise the kind of influence which is implicitly intended in most real-life Theories of Change: plus influences.

⊕: “Plus” influences

A “plus" symbol ("⊕") on an arrow from A to B means that any increase in A will lead to an increase, or at least no decrease, in B. ℹ And we specify that A has to have some effect on B, i.e. the influence of A on B is not completely flat. Most influences in real-life Theories of Change are”plus". In Theorymaker, we assume that if the type of influence is not specified, assume it is “plus”. So normally, we only bother with the ⊕ symbol when we need it to avoid ambiguity. ℹ Plus, maybe there is an implication that any likely Difference on the influence Variable will lead to a Difference on the consequence Variable which has practical significance . Otherwise, we should only use a thin arrow.

How warm you feel depends partly on the strength of the sun. These two diagrams are equivalent; in the second, the “plus” symbol is used instead of words.

These are usually called “positive” rather than “plus” influences, but that is confusing when the consequence Variable has negative valence, e.g. what is a “positive influence on mortality”? That’s why we prefer the word “plus” in Theorymaker.

“Plus” influences can be specified between any directed Variables - that’s a majority of Variables in real-life Theories of Change, including “false/true” Variables. They are essentially what a mathematician would call “positive monotonic functions”.

“Plus” is just the Theorymaker word for “positive monotonic” in maths.

⊖: “Minus” influences

This arrow could be misleading because strength of wind makes us feel colder, not hotter.

We prefer to refer to “plus” and “minus” influences rather than “positive” and “negative” influences, because the latter can be misleading when referring to Variables which we don’t like - for example, what is a “positive influence on mortality”?

Theorymaker uses a blue arrow and/or a ⊖ symbol to mark minus influences: any increase in wind means a decrease in subjective temperature.

Minus influences work with false/true Variables too, just as plus influences do.

“Plus” (or “Minus”) influences with false/true Variables

As “plus” (and “minus”) influences are defined on any directed Variables, in particular they work for “false/true” Variables.

If we conceive of wearing warm clothes as a false/true Variable, in the right circumstances, you will feel warmer when you are wearing warm clothes, other things being equal. So this is a “plus” influence of a false/true Variable on a lo-hi Variable.

Later we will look at the influence of two or more false/true Variables on a false/true consequence Variable.

If we also conceive of sweating (as well as wearing warm clothes) as a false/true Variable, increasing the sunshine (in the right circumstances) leads to sweating. So this is a “plus” influence of a lo-hi Variable on a false/true Variable.

If we conceive of both as false/true Variables, then in the right circumstances, you’ll sweat if and only if you are wearing warm clothes. Some real-life Theories of Change consist only of these kinds of Variables.

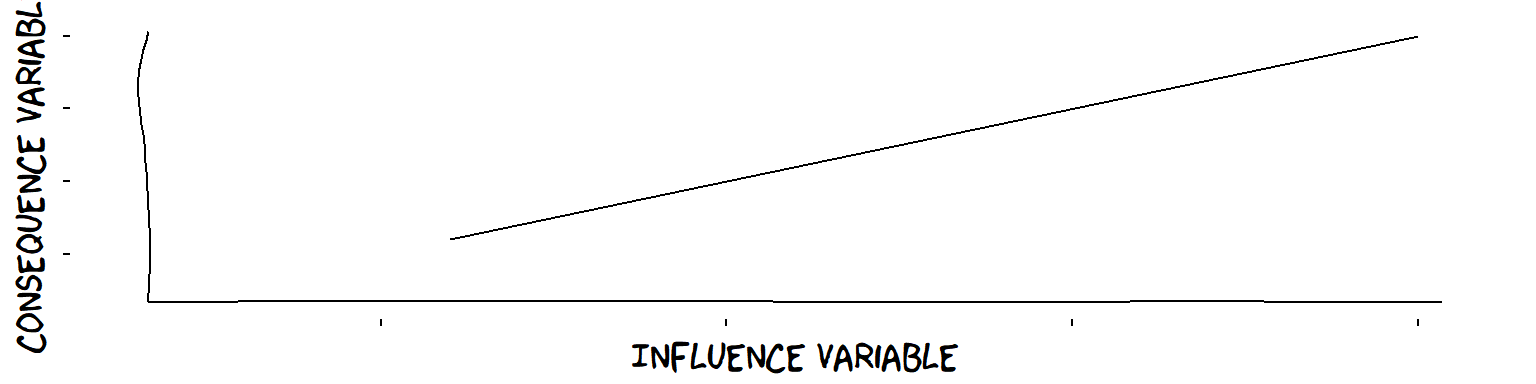

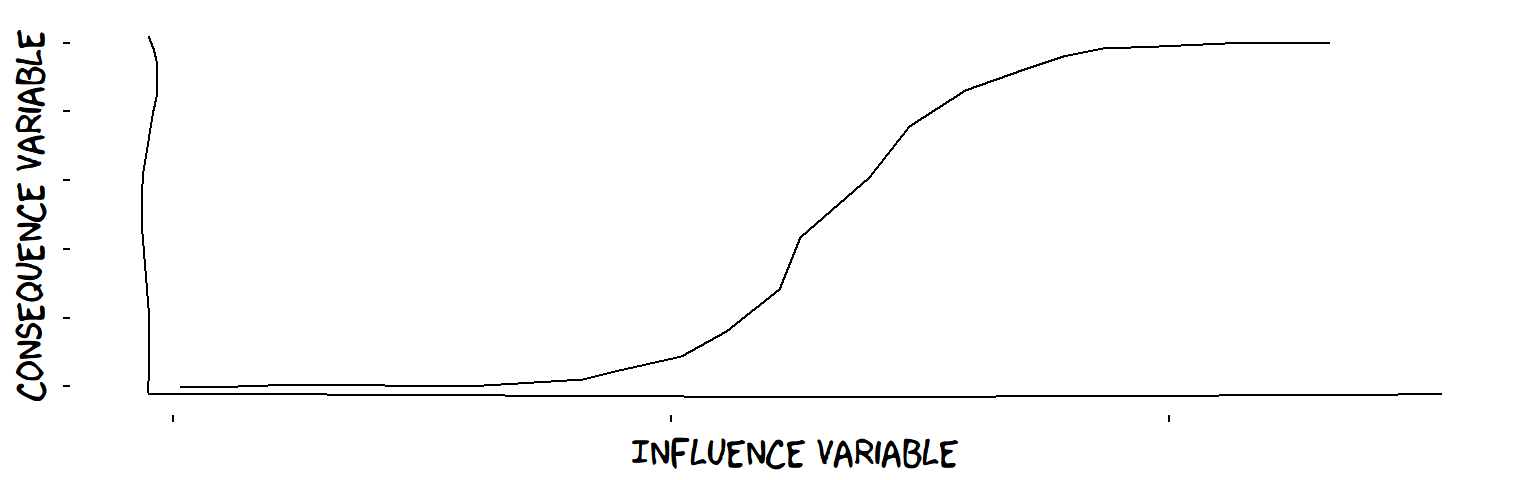

A “plus” influence is probably not linear

The “⊕” symbol tells us that the influence of support for the law among the population is “plus”, i.e. an increase in one leads to an increase in the other. But is this influence linear (e.g. a 1% increase in population support means that support among legislators always goes up by a fixed amount, say, 2%)?

A linear influence

And what if we can’t even agree how to measure or express the Variables in terms of numbers? ℹ It seems quite natural to draw a rough graph to illustrate plus influences. But if these are only lo-hi Variables, drawing graphs suggests a level of measurement (interval or ratio) which we don’t have - see (Stevens 1946). So we have to be careful how to interpret them. We musn’t get tempted to say, for example, that two pairs of points are the “same distance” apart. For instance, linear influences can’t even be properly defined for lo-hi Variables (so we can’t really even draw graphs either).

Well, Theorymaker argues that the idea of a “plus” influence is much clearer and more universal than the idea of a linear influence. “More of this means more of that” is pretty easy to understand and not impossible to verify. Real-life Theories of Change are full of plus influences.

A plus influence

Not a plus influence, because of descending section

All of this applies to “minus” influences too.

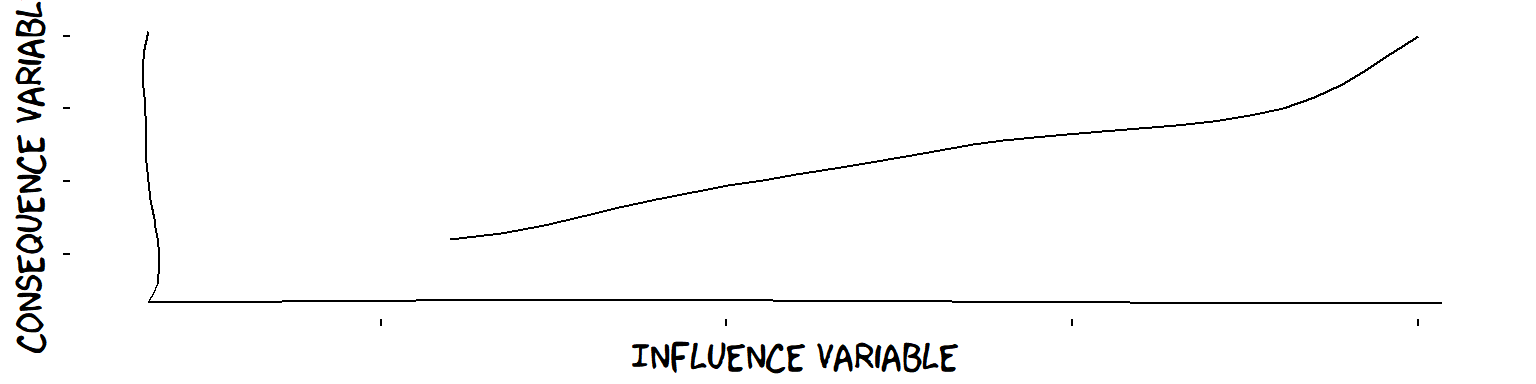

Special kinds of “plus” influence with just one influence Variable

- S-influences (from continuous Variable to continuous Variable) ℹWhen all the members of a set of more than one influence Variables (independently) have plus influences on a lo-hi Variable, the influences are probably S-shaped. This is the equivalent of linear superposition amongst interval Variables. For example, characteristics of parenting style and size of apartment and IQ at age 10 might all have a plus influence on happiness at age 25. If happiness is scaled from, say, 0 to 100, then these influences must gradually decrease as happiness increases, otherwise we would break the ceiling of 100.

An S-shaped influence

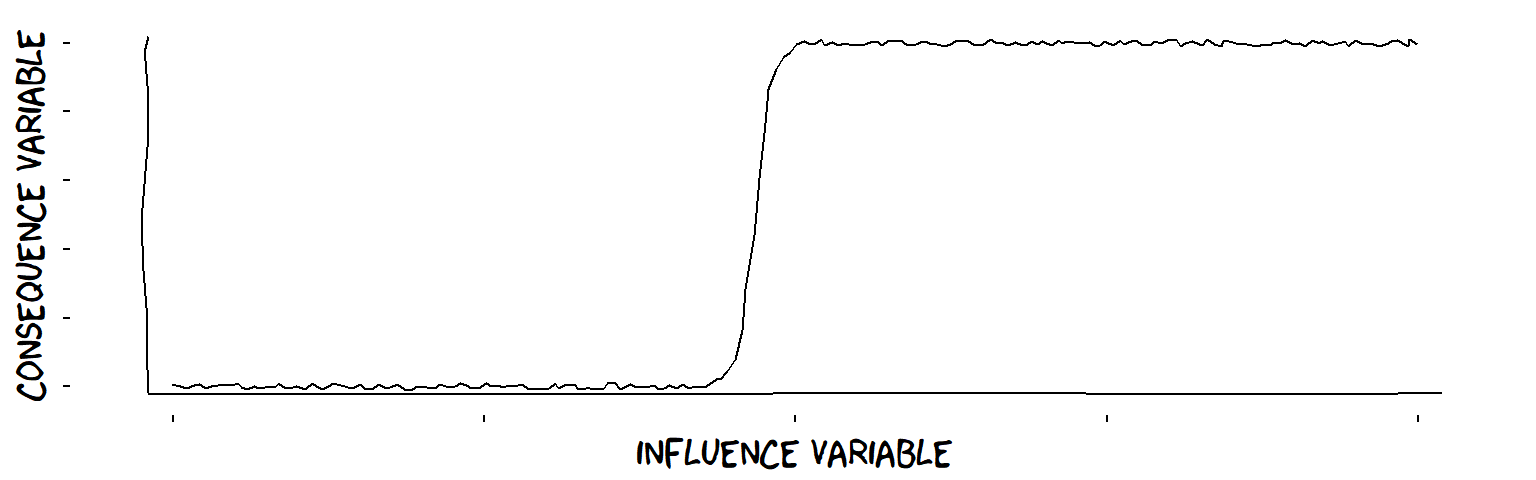

- Threshold influences (from continuous Variable to continuous Variable) …

A threshold influence

- Threshold influences (from continuous Variable to false/true Variable) …

…

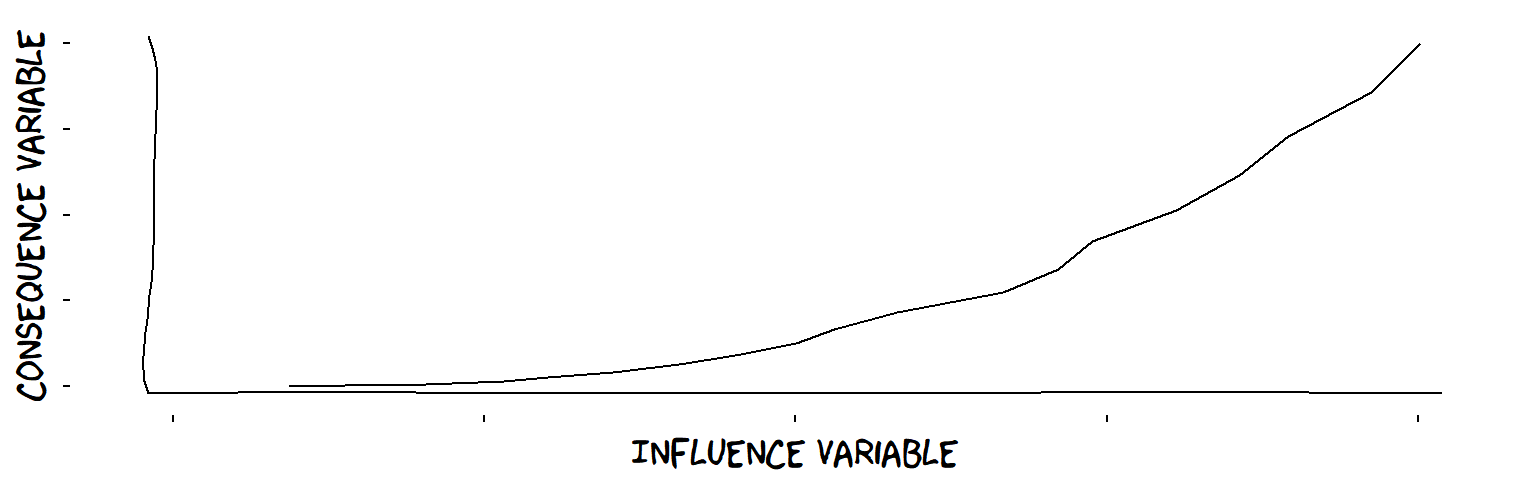

- Increasing influences

An increasing influence

- Decreasing influences

A decreasing influence

For each of these, there is a parallel for “minus” influences too.

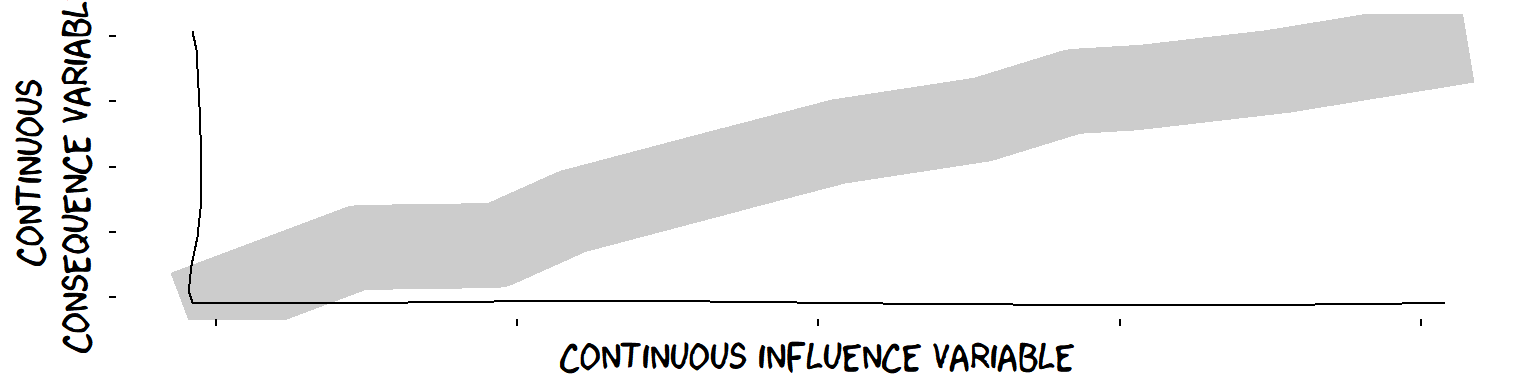

We can also emphasise uncertainty around the consequence Variable by showing a band rather than a line

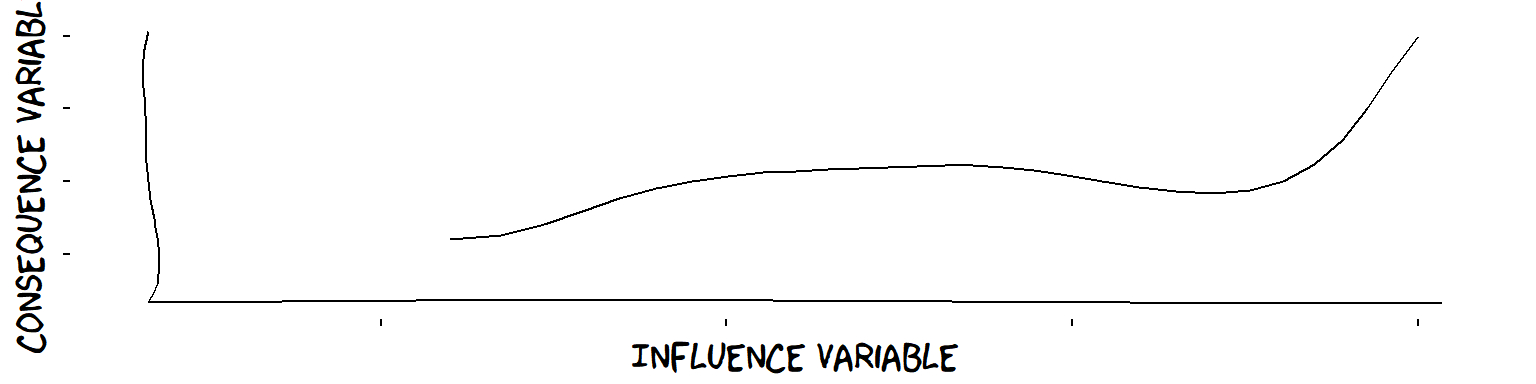

Influences which are not “plus” or “minus”

“Plus” (and “minus”) influences are a very wide class which cover very many of cases we see in real-life Theory diagrams. But it is worth mentioning a few which are not.

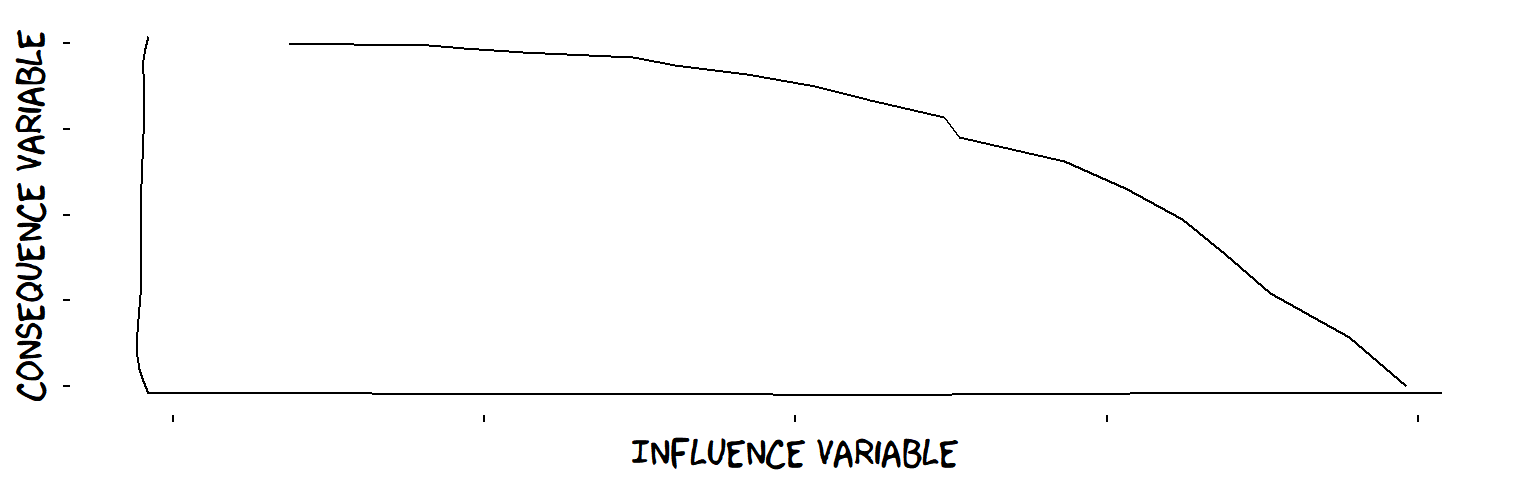

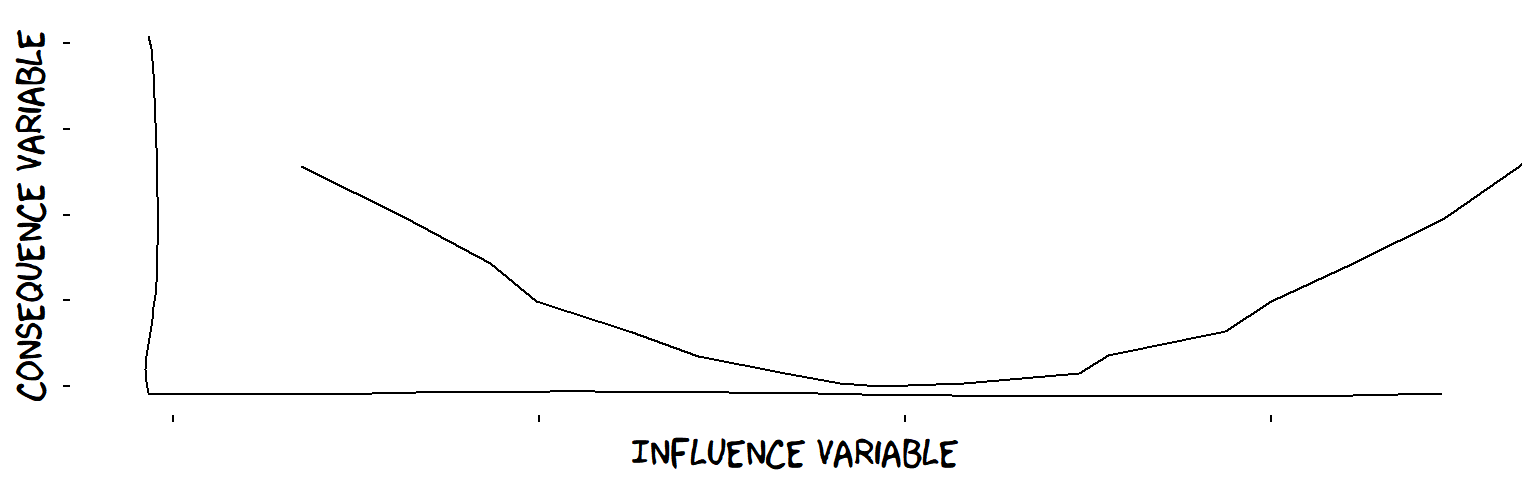

- U-shaped influences

A U-shaped influence

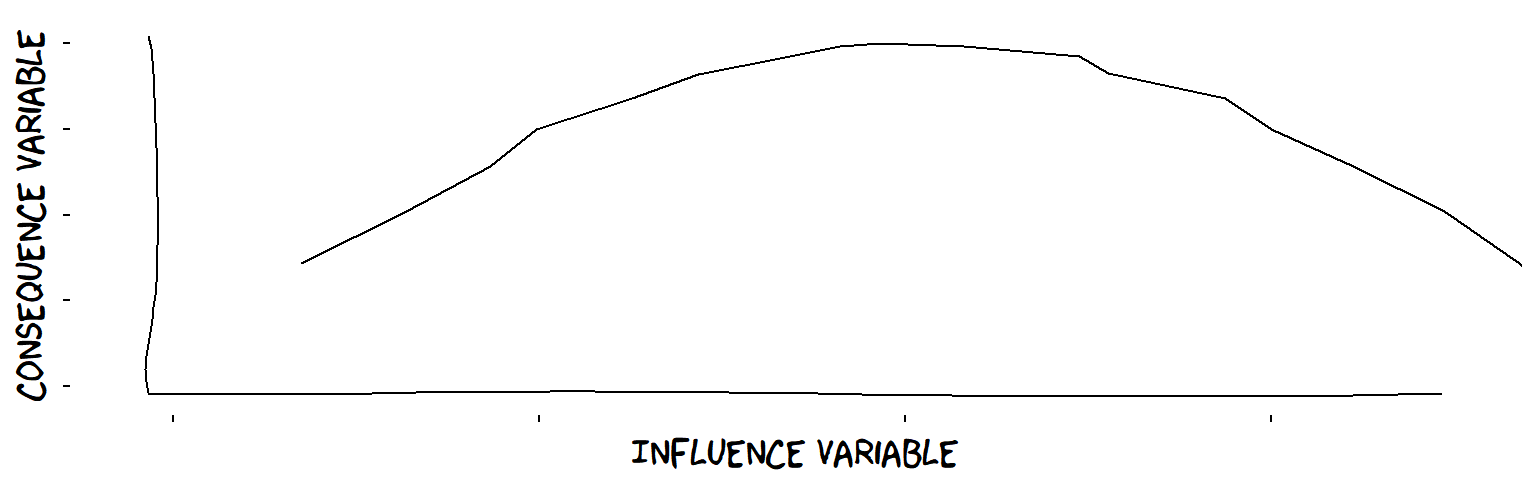

- Inverted-U influences

An inverted-U influence

Example: student performance in relation to level of pressure (not too much, not too little).

More than one influence Variable

We already saw that a simple Theory tells us what level of the consequence Variable to expect for every combination of the levels of the influence Variable(s).

In general, the influence of, say, feeling supported and liked might be different for every different level of living up to expectations.

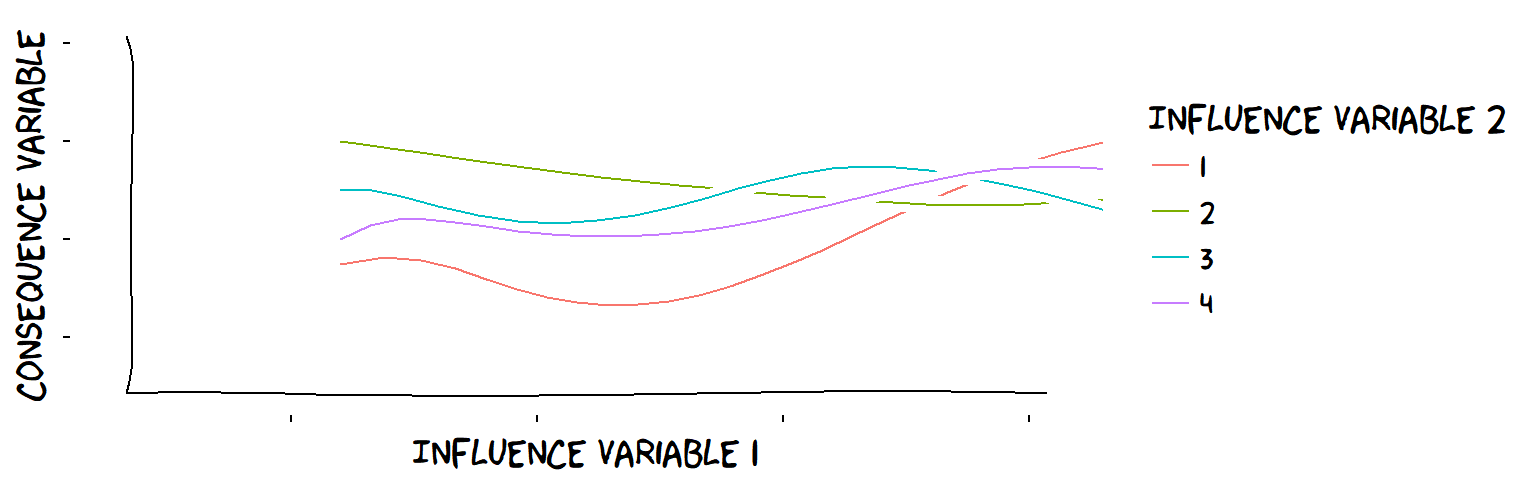

A very confusing interaction between a continuous influence Variable and a discrete influence Variable

Independent influences

If a set of Variables have independent influences on a consequence Variable, this means that the influence of each Variable is completely unaffected by the levels of the others. ℹ The influences of independent interval Variables can be literally added up. This is called “linear superposition” and is part of what people mean when they use the word “linear” when talking about Theories of Change.

Assuming independence makes life easier because we can break down their collective influence into separate influences, one for each Variable, which work independently, of one another. This is simpler to think about and work with. However, it’s a big assumption which is nearly always false!

There are easier ways of thinking about issues like independence – see next slide.

When people talk about linear influences in Theories of Change, they often mean the idea that, if a Variable has more than one Variable influencing it, the total influence on it is just the sum of the influences of the individual influence Variables. In other words, to know the influence of “Support among population” on “Support among legislators”, it is not necessary to know anything about “Pressure from party leadership”, and vice versa.

“Linear” in this sense is short for “Linear superposition”. Quantitative scientists like it because it makes things easier to calculate. But it would be “fake science” to think that therefore, that’s how the world is. Suppose for example that, when interpreting support from the population, individual legislators consider what the party leadership thinks. For example, if the leadership is totally against, pressure from the population might have a negative influence on legislators.

“Robust influences” - a rough way to talk about “independence”

An influence on a consequence Variable is “robust” if the type of influence (“plus”, “minus” etc.) does not change when the levels of the other influencing Variables change. ℹ So the other Variable(s) might affect the fine detail of the influence but they don’t change its original type. This means that whether an influence counts as “robust” can depend on how it is characterised. So an influence which is characterised as “plus” might be robust, but if it was characterised as “additive” it might not be, because characterising an influence as strictly additive is stricter. It is less likely to remain additive at every level of the other influence variables. Influences which are specified as numerical functions - for example linear, exponential, etc., are only separated if they are actually independent.

This is a much more realistic assumption than actual independence; much more likely to actually happen in real life. We mark a robust influence on the individual arrow. If an influence is, say, overall plus but not for every level of the other influencing Variable(s), we can mark it on the arrow but add a “?”.

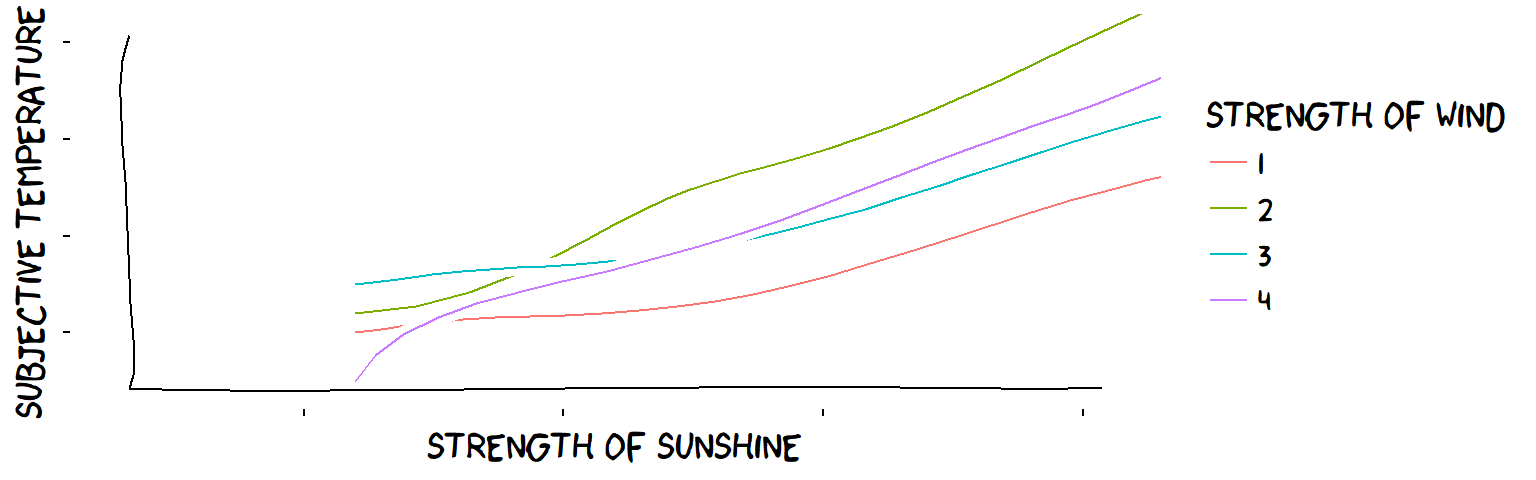

So in this case, the influence of the wind on subjective temperature might be affected somehow by the strength of the sunshine, but it remains “minus” whatever the sunshine (and the influence of the sunshine is “plus” regardless of the wind): the influences are shown on the arrows.

Wind strength certainly affects the influence of sunshine on temperature; they are not “independent”. But, for each level of wind strength, the influence of sunshine remains “plus”, so we can say that "the ‘plus’ influence of sun and the ‘minus’ influence of wind are both ‘robust’.

The two influence Variables are not strictly independent but they are ‘Robustust’ when characterised as ‘plus’ and ‘minus’ influences

Influence symbols in Variable labels

An influence symbol in a Variable label (rather than on the arrows) says that all the influences are of this type (and are ‘robust’).

Using “?” to show that an overall influence is not robust

If one influence on a Variable when ignoring or aggregating its other influences Variables is, say, “plus”, we can say that it is “overall plus”; and if it is not robust, because the influence is not always plus for some levels of the other influences, we can mark it on the arrow but with a “?”.

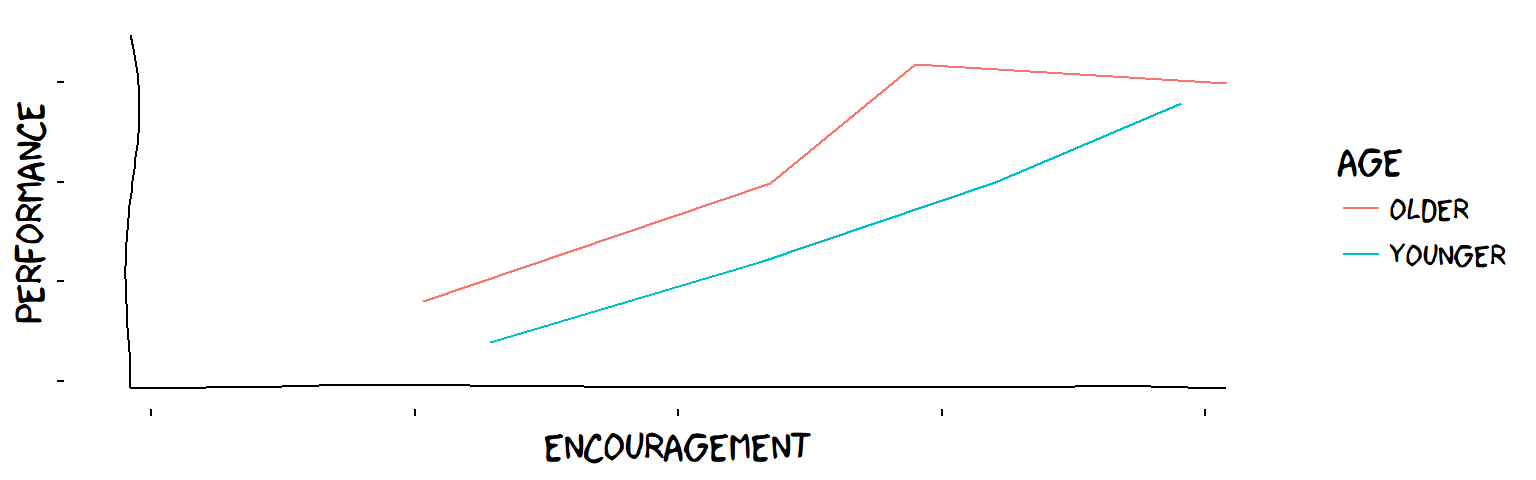

The influence of encouragement and age on children’s performance on some task

Overall, the influence of encouragement on the children is plus ℹ This is made-up data, as usual! , but not robust: for one age group, increasing the level of encouragement does not always bring increases in performance (older children respond poorly to too much encouragement).

This is information which is practically useful. It means, before you considered age, you could adopt the overall rule (“more encouragement is always good”). When you enriched your theory to include the age groups, you realised you had to consider the child’s age when applying this rule.

The influence of age, on the other hand, is plus, and robust (older children always score better, regardless of the amount of encouragement). If the blue line had crossed the red line, the influence of age would not have been robust, and we would have to add a “?” to the other symbol too.

Defaults: assume that influences are “plus” and “robust”

We assume, by default: 1) if an influence is not described, it is “plus”; and 2) when there are several influences on a Variable, they are robust

… so in particular, if there are several influences on a Variable which are not specified, they are robust, “plus” influences.

If you’re reading a Theory of Change “in the wild”, most times you can guess that this is what is meant (if indeed the authors of the Theory thought about it at all). ℹ “Robust” influences and “separation” are a generalisation of linear superposition and they are part of what people mean, mostly in a critical way, when they say “ah but you assume everything is linear”.

Combined influences: Joined arrowheads

Individually, number of staff and quality of management both have a positive (plus) influence on the success of the intervention. But if the management is poor, adding staff can actually make things worse, i.e. the influence of staff number is no longer plus, and the success is endangered. We say that the influences are not robust and the arrowheads are drawn joined.

We should explain more about this lack of “robustness” in the diagram or the narrative, so the reader has a rough idea of what the different combinations of the influencing Variables does to the consequence Variable.

Combined influences: synergy

These influences are all plus, and they are robust because, for each Variable, whatever levels the other Variables have, its influence on the consequence Variable is still “plus”.

But, often we assume a kind of synergy: that the total influence of one Variable on the consequence Variable always gets larger as any of the other Variables increases. We can call this “synergy” - a subtype of multiple, separate, plus influences. ℹ But how coherent is this idea really? If the consequence Variable is lo-hi, the influences have to drop off asymptotically at some point. And, should we draw the arrowheads separate or together?

Combined influences: maximum and minimum

An influence which is the maximum of two Variables

An influence which is the minimum of two Variables

A very interesting special case is when all three Variables are false/true.

Combined influences: two false/true Variables (necessary & sufficient conditions)

When we have two (or more) false/true Variables influencing a false/true consequence Variable, there are not many different combinations. We find that if both are “plus” and/or “minus”, the influences cannot be robust. There is always a kind of dependence.

The influence of Spark on Fire is plus, and likewise for Oxygen. But both are necessary, so if one is absent, the other is no longer plus: these influences are not robust. We draw the arrowheads together and write “AND”.

The influence of either flamethrower is plus. But both are sufficient, so if one is present, the other is no longer plus: these influences are not robust. We draw the arrowheads together and write “OR”.

We can think of AND and OR as a special case of, more generally, “maximum” and “minimum” combinations of influence Variables.

See also INUS condutions.

Delays

Generally, influences between Variables with a relative or absolute time should have a delay specified with “Delay: ” - in general on the consequence Variable, or on the arrow if the influence is robust. The delay specifies how much later is the consequence than the influencing Variable (this is not necessary if relative or absolute time is already specified on the Variables). This is necessary also in the case of repeated or continuous Variables.

Specifying delays between repeated and continuous Variables can be quite challenging:

Feedback loops

If you can follow the arrows from one Variable back to itself, the path is called a “feedback loop”.ℹBasically all the causal networks in Theorymaker are really DAGs - Directed Acyclic Graphs (Pearl 2000) . Loops are only possible with for-each-time Variables, which are actually sets of Variables, so there is no actual loop from a Variable at a single time-point to itself, otherwise it would be causing itself.

The Variables involved must stretch across time; the “~” in front of a Variable name shows this. (The ↺ symbol is explained later.) Variables with a fixed time-point, common in very simple step-by-step Theories such as most “logic models” can’t take part in a feedback loop.

The delay may need to be specified if not obvious.

🞕: Influences which “optimise” the level of the consequence Variable (in feedback loops)

Here, program staff know the volunteers are most effective at a medium level of excitement, so if the volunteers are too lethargic, they offer plenty of inspirational input and if they are too excited, they try to calm them down.

We use the Theorymaker symbol 🞕 to show that the influence which controls the Level of a Variable in a feedback loop is aimed at maintaining some kind of optimum, pushing up low values but bringing down high ones. It is unlikely to be a simple “plus” influence aka “positive feedback”. Why not?

↺: “Memory” Variables

How does sea temperature depend on sunshine?

(The ~ sign says this Variable repeats continuously across time.)

… but this suggests that the sea temperature plummets every time the sun goes behind a cloud.

In fact the sea takes a long time to cool much: to know its temperature at one moment, we need to know about sunshine and the temperature in previous moments: it’s a “memory” or “stock” Variable.

An arrow from a Variable to itself or a ↺ symbol in the body of the Variable indicate a memory Variable: one whose level depends partly on its own previous level.

!flow: “Flow” configurations with “Valve” Variables

A “!flow” symbol on a grey arrow from X to Y with a tapering arrow incoming from another Variable V, can be understood as a “Flow” configuration, simulating the way that material flows from X to Y under the control of the “valve” Variable V.

This can be translated into a pair of more basic Theorymaker concepts: an “Upstream” influence on the upstream Variable and a “Downstream” influence on the downstream Variable.

Here, the arrows do not represent causal relations but are like pipes for transferring material, as if the apples actually flow down those pipes. The diamond shapes represent “flow” or “valve” variables. You can imagine they could be just closed or open, or limit flow to a certain amount. (These kinds of elements are central to “stock-and-flow” diagrams which are familiar from Systems Dynamics. ℹ (The oval shape at the start is called a “stock” and the final oval shape a “sink”.) The arrows are sometimes drawn as double lines.)

The direction of the arrows are like the direction of gravity; when the valves open, the apples flow, and the wider they open, the faster the apples flow. So the green variables are measured in numbers of apples, whereas the flow variables are measured in apples per minute.

It is possible to reproduce idea of flow in Theorymaker. Here is how the stage from warehouse to shop ℹ (the others are simpler) is represented using more basic Theorymaker elements; all we need to do is clarify what the “upstream” and “downstream” influences are. They are explained if you hover over the “Fundamentals” button above.

So we have succeeded in translating a flow of material in terms of basic Theorymaker elements using only our more fundamental concept of causation. ℹ Real-life “stock-and-flow” diagrams usually include information links as well. These are no different from normal Theorymaker arrows and so need no special treatment. When combining these ideas to translate larger diagrams such as the one on the left, the levels of the intermediate Variables (Warehouse and Shop) are determined by two influences - a Downstream influence as the recipient of apples and an Upstream influence as the source of apples for the next Variable.

Definitions of the pair of “Upstream” and “Downstream” influences which we need to model stock-and-flow diagrams.

\[Upstream(V,W_{t1})=max(W_{t0}-V,0)\]

So if, for example, time is being measured in seconds and flow Variable V is being measured in apples per second, you can calculate the level of apples in the upstream container W (“Warehouse”) by using this Upstream function: the level will be the level of W in the previous second, minus V, unless the level of W in the previous second was 0, in which case the level of W now will also be zero - because we have run out of apples and we can’t have a negative number of apples.

\[Downstream(V,S_{t1},W_{t0})=S_{t0}+min(V,W_{t0})\]

Similarly the level of apples in the downstream container S (“Shop”) can be calculated by using this Downstream function, which also depends on the upstream Variable - because the level of S will be the level of S in the previous second, plus either V, or the number of apples left in W in the previous second if this is less than V.

“Source” and “Sink” Variables - the first and last in the chain - are trivial; their levels are always “infinity”, so they never change and we don’t need to bother calculating them.

These definitions only deal with discrete time. To deal with continuous time, one would have to generalise these functions.

The case of the camel’s back: a memory influence leads to a threshold influence

A camel’s back is a combination of a memory influence followed by a threshold influence

There are plenty of real-life instantiations of this kind of causal chain.

Good practice: Noting the evidence for specific influences, on the arrows

In this diagram, the evidence for the collective influence of both Variables has been added to the “influence” part of the consequence Variable’s label. And an additional piece of research which supports the influence of just one consequence Variable has been noted on its arrow.

See also Dunford’s suggestion to use arrow colours to represent the strength of evidence (Dunford 2012).

Thicker arrows for bigger influences

The thickness of the arrows in a Theory of Change can be used to show the relative strength of the corresponding influences.ℹThis assumes that we can actually say or calculate what the relative strength of the influences are, see later.

Here, we want to show that the influence of the other campaign is much less important than public opinion.

We can do the same thing with the sizes of bare arrowheads for unnamed influences.

Here, we want to show that the other influences on public opinion are much more important than our campaign; and that the other influences on the legislators are relatively insignificant.

Do we assume that, if not otherwise specified, the presence of a normal-sized arrow on a diagram means that the size of the influence is not just tiny but of practical significance?

SECTION 5: Different kinds of grouping boxes

This section (work in progress) will show some different ways of using grouping boxes in Theories of Change.

| Contents of this section | |

| Grouping boxes to organise projects | |

| Arrows from grouping boxes | |

| Arrows to grouping boxes | |

| Arrows between grouping boxes | |

| → Next section |

More kinds of grouping boxes coming soon!

Grouping boxes to organise projects

In general, a diagram in which several Variables have the same text as part of their labels is equivalent to one in which those Variables have the text removed from their label but they are surrounded by a grouping box with that text as its label. This can be useful e.g. to organise projects, to group Variables into sectors, phases, sub-projects, regions etc.

Arrows from grouping boxes

We often see this kind of diagram in the wild.

An Arrow from a Box is usually a kind of a shorthand which replaces Arrows from the Variables it contains…

… so it is equivalent to something like this:

… but … Are the influences supposed to be “plus”? How do they combine - are they all “robust”? Perhaps one or two of the Variables in the box have no influence? Perhaps the Variables in the box combine into one single Variable which then influences the consequence Variable?

The minimal interpetation is this:

An arrow from a box to a Variable V means that at least some of the Variables in the box have some kind of influence on V.

Arrows to grouping boxes

An Arrow to a Box is usually a kind of a shorthand which replaces Arrows to the Variables it contains…

… so it is equivalent to something like this:

Arrows between grouping boxes

An Arrow between boxes is usually a kind of a shorthand, perhaps just to avoid drawing too many arrows …

… so it is equivalent to something like this:

… but … are these supposed to be “plus” influences, or what? Were all the possible arrows really implied? Are the Variables in the first box combined before influencing the Variables in the second?

The minimal interpetation is this:

An arrow between boxes means that at least some of the Variables in the first box influence at least some of the Variables in the second, in some way.

SECTION 6: Theories of Change; Value; multiple stakeholders

In this section, (work in progress), we see how a Theory of Change can be understood as a Theory about what we have to do to get what we want: How our ▶ Intervention can lead to something we ♥ value. We will look also at what happens when we have multiple stakeholders.

A Theory of Change can have more than one valued Variable

Lots of Theories of Change have only one valued Variable,

- the last one in the chain

- often called “Top-level Outcome”, “Goal” etc.

But in the real world, you (or maybe other stakeholders) often value more than one Variable.

The laptops might have other valuable consequences for the students, so mark them as valuable, e.g. “♥” or even “♥ ♥”.

Intemediate Variables can be valuable too

In the real world, you (or maybe other stakeholders) might value Variables which are not at the end of a chain. If so, mark them as valued too, e.g. with a " ♥".

Maybe you would still be quite pleased with the project if it made a big difference to student self-confidence regardless of its effect on exam results:

Some intermediate Variables (like attending workshops) are really only means to an end.

But some are valuable in their own right: don’t undersell your project if it really does produce additional value! Add more " ♥" for Variables you value more!

Sure, someone can always argue “you only value self-confidence because it leads to something else, the things you really value”. But you can say that about any valued Variable, for example employment prospects. And then it is turtles all the way down.

Value, Intervention, belief in a Theory: three sides of the same coin

Intervention, Value, Theory - these are three sides of the same coin which we call Theory of Change.

So a Theory of Change is always somebody’s Theory of Change, just as a plan is always somebody’s plan. We call this person the “Actor” of the Theory of Change.

We can perhaps define any of the three in terms of the other two - e.g. we can define what we mean by a “valued” Variable A in terms of the other two concepts - plan and intervention. A directed Variable V is valued by a person P, if P always intervenes on other Variable(s) in such a way as to maximise V according to P’s Theory of Change.

If the Actor knows and believes the Theory of Change and intervenes with such-and-such effects, we can assume that they desire the effect of the intervention according to the Theory of Change.

I might randomly or excellently do something and it might have an accidental effect on variables I value, but that doesn’t make it into a plan or project, it wasn’t intentional and the effects wouldn’t be credited to me for praise or blame.

Often in evaluation, we need to know whether some act was intentional. And although intentionality has been very hard for philosophers to capture, this isn’t a bad approximation:

FundamentalsAn act is intentional if it is in accordance with the Actor’s Theory of Change.

Suppose for example you were observing a government agency trying to respond to varying levels of air pollution. To you, their behaviour seems really strange because they instruct people to burn more wood when high air pressure is tending to encourage the formation of smog. There are two possibilities:

- they aren’t actually trying to reduce air pollution. Perhaps they have some other aim - they value something different;

- or perhaps they do want to reduce air pollution, they just have a different Theory - from our perspective, a really bad one.

Similarly, suppose you are trying to understand better the Theory held by members of a campaign group. You will probably also want to observe what they do in different cases, and what measures they take in what order. But in order to understand that, you will need to know what they are aiming for, what they value. The two things go together.

Now for a third example in which the valued Variables and the Theory are clear but the intervention is not. Suppose you are observing a complicated field of battle with many forces involved. You can see various positions being bombarded at different times but you don’t know who is responsible. If you know the aims and Theory of the different forces involved, you can pretty quickly work out who is responsible for the bombardments.

So you could say that Theorymaker follows a utilitarian theory of motivation xx.

You think you know someone’s Theory and what they value, and you can identify their actions. But you are puzzled because they seem to frequently do things which don’t seem to be in their interest. Probably you will conclude that either you haven’t understood their Theory, or that, in spite of what they say, they are actually following a different, hidden Theory; or perhaps you will conclude that you have misunderstood what they value. Or perhaps they say they really only value gender equality and empowering teenagers, but you deduce they also have a personal agenda and are interested in developing their career and visibility (which would be quite understandable).

Intrinsic value

We already noted that a valued Variable does not have to be at the end of a causal chain. In fact, it can even be at the beginning of one.

This kind of thinking is very important for instance to understand spiritual and religious motivation. It is related also to the idea of the “humanitarian imperative”. From this point of view, the consequences can be more or less important, but certainly the process is important.

Multiple Actors: Who acts on what, who values what?

When different stakeholders or “Actors” interact, they are usually able to intervene on different Variables. This can be done by using different colours, or by writing the name of the Actor before the ▶ symbol, as we do here. We can use ♥ symbols, preceded by the name of the Actor who values this particular Variable, in the same way. ℹ♥ symbols are only assigned to stakeholders who are also actors - because only they can change anything?

Previously we have only seen Value ♥ and Control ▶ symbols used globally to show what “we”, the makers of the Theory, value and control. Now we are zooming out and taking a meta-perspective.

This kind of presentation can be really useful for realising that different Actors might do different things, or put different a different emphasis on the same things, because their motivations are different.

In this example, part of the trainer’s motivation is simply the act itself - of providing pro-bono training. All the Actors value the main outcome, improved teaching, whereas the increased pay to which the teacher is entitled after completing the training is a positive motivation for the teacher but a negative one for the Ministry. ℹ A later slide expands on this one, focusing on the fact that not just one but several teachers might be involved.

In this case, the Actors differ on two of the dimensions of the Theory of Change - what they control and what they value - but share the third aspect, namely the Theory itself, the one shown here. More generally, they might not.

See also (Hansen and Vedung 2010) on evaluation with multiple stakeholders, and (Van Ongevalle et al. 2012) for “actor-focused” evaluation approaches.

Re-evaluating value

Michael Scriven is one thinker who has often raised the question of which values are really valuable, and encouraged evaluators to (re-)evaluate officially posited values.

In the diagram, the two intermediate Variables, the planned Outcome and another interesting Outcome (which may or may not have been planned) are assessed in terms of value above and beyond the value expressed in the one Variable which was originally posited as being valuable (“♥”). The rules about how to assess them in terms of shared values, and indeed the shared values themselves, are marked here (spiral symbols) as inter-subjective: we can hopefully agree on them in retrospect but we can’t definitively specify them in advance.

Interestingly Chen & Rossi’s original paper (1980) on Theory-Based Evaluation focuses also on multiple goals ℹ(they say, why not include all the Variables which might be affected by the program, in the opinion of different stakeholders; why not assess value for each of different subgroups …) and the possibility of re-assessing originally posited goals.

FundamentalsOf course, merely drawing a picture tells us nothing about how this is actually to be accomplished in an evaluation. But if indeed values are not in the end distinguishable from facts, we shouldn’t necessarily need completely new methods.

SECTION 7: Effect and contribution: Doing calculations with Differences

This section (work in progress) is about how to calculate the contribution of some Variables on others.

We encounter words like “contribution”, “attribution”, “effect” and “impact”.

Statements: using double brackets and _ to report the actual (or “factual”) level of a Variable

All of these are Statements:

The last three examples give (optional) specifications of levels; two use symbols and the last one gives an explicit list.

The trailing "_" helps to distinguish Statements from Differences and also from summarised Differences.

These Statements are more or less equivalent to ordinary English sentences - the kind of sentence which can be right or wrong. But they use double-brackets to make clearer than ordinary English does which is the part which could be different. We can write them just as sentences or we can also include them in graphical Theories of Change.

This very simple Theory tells us that the opinion of the constituents might have some kind of influence on the passing of the law; and it also tells us that the support level was about 50% and that the law was actually passed. But the diagram doesn’t give any indication whether the law would have been passed had the level of support been lower, or even higher - so actually it isn’t much use to us as evaluators or managers.

If you compare the trailing “" in a Statement with how a Difference is formulated, you can imagine that the counterfactual level which would make this into a Difference, which could go after the "” or the “rather_than”, is missing.

Statements on their own are not enough to establish causation.

Differences: the Variable takes its factual level “rather_than” the counterfactual level, expressed within double brackets

Formulations like “Approval rating ((very good rather_than poor))” are called “Differences” - with a capital D. Meaning: the approval rating is very good, (rather than poor, which is perhaps what we were expecting).

When something happens to a Variable, e.g. we change its Level deliberately, its factual level becomes something different from what we otherwise expected (“the counterfactual”). So if we decide to stock up a food warehouse which has only 100kg of food with another 500kg, we can say: